AI Gobbles Water and Energy

Air Date: Week of September 27, 2024

According to researchers at the University of California, Riverside, a 100-word email generated by an AI chatbot consumes 519 milliliters of water, a little more than a bottle of water. (Photo: Markus Spiske, Unsplash, Fair Use)

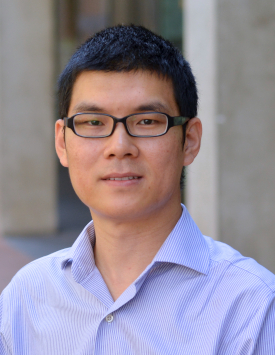

Artificial intelligence can now write entire coherent books, but it takes tremendous amounts of water and energy to perform the billions of calculations that even basic queries can require. Shaolei Ren, Associate Professor of Electrical and Computer Engineering at UC Riverside, joins Host Aynsley O’Neill to discuss concerns about resource-intensive AI systems and possibilities for improvement.

Transcript

DOERING: It’s Living on Earth, I’m Jenni Doering.

O’NEILL: And I’m Aynsley O’Neill.

Artificial intelligence has become a part of everyday life, but there’s been little regulation so far of how AI is deployed and used. And currently, there’s no law on the books in the US that requires AI companies to disclose their environmental impact in terms of energy and water use. So, concerned researchers rely on voluntary data from companies like Apple, Meta, and Microsoft. But research is showing that AI may be even more resource-intensive than originally thought. Imagine that you want to ask an AI program to write up a one-hundred-word email for you. You get an almost instant response, but what you don’t see are the intensive computing resources that went into creating that email. At the AI data center, generating just two of those emails could use as much energy as a full charge of the latest iPhone. And according to researchers at the University of California, Riverside, that 100-word email could use up a whole bottle of water for the cooling these data centers need. Here to talk more about AI’s energy and water consumption is Shaolei Ren, Associate Professor of Electrical and Computer Engineering at the University of California, Riverside. Welcome to Living on Earth, Shaolei!

REN: Thanks for having me.

O'NEILL: For those of us who are unfamiliar with the technical aspects of how AI works, why does it take so much more energy and so much more water than anything else you do on your computer?

REN: Well, because a large language model is, by definition, just really large. And what we mean by large is that each model has several billion parameters, or even hundreds of billions of parameters. Roughly speaking, if you have 10 billion parameters, then to generate one token, or roughly one word, you're going to go through 20 billion calculations. Think about that: you would need to press your calculator 20 billion times. So that's a really, really energy-intensive process. And this energy is converted into heat, so we need to get rid of the heat. Water evaporation is one of the most efficient ways to cool down data center facilities, so that's why we also use a lot of water besides the energy. And here, by consumption what we mean is the water evaporated into the atmosphere. Sometimes that's considered lost water, because although it technically is still within our global water cycle, it's not available for reuse from the same source in the short term. So, water consumption is really the water withdrawal minus the water discharge, and that's very different from the water that we use to take a shower. When you take a shower, you withdraw a lot of water, but there's not much consumption.
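Ren's rule of thumb, about two calculations per parameter for each generated token, can be turned into a quick back-of-the-envelope estimate. The sketch below is illustrative only: it assumes one multiply and one add per parameter per token, ignores attention and other overheads, and uses a hypothetical ratio of 1.3 tokens per English word.

```python
"""Back-of-the-envelope count of arithmetic operations for LLM text generation."""

# Assumption: each parameter takes part in one multiply and one add per
# generated token, i.e. about 2 operations per parameter (attention and other
# overheads are ignored).
OPS_PER_PARAM_PER_TOKEN = 2

# Hypothetical average; the real tokens-per-word ratio depends on the tokenizer.
TOKENS_PER_WORD = 1.3


def ops_per_token(num_params: float) -> float:
    """Approximate operations needed to generate a single token."""
    return OPS_PER_PARAM_PER_TOKEN * num_params


def ops_for_words(num_params: float, num_words: int) -> float:
    """Approximate operations needed to generate `num_words` words of output."""
    num_tokens = num_words * TOKENS_PER_WORD
    return ops_per_token(num_params) * num_tokens


if __name__ == "__main__":
    params = 10e9  # the 10-billion-parameter model from the interview
    print(f"Per token: {ops_per_token(params):.1e} operations")
    print(f"Per 100-word email: {ops_for_words(params, 100):.1e} operations")
```

With 10 billion parameters, the per-token figure matches Ren's 20 billion calculations; the email total simply scales it by the assumed number of tokens.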

Excited to share our “AI Water” paper has been accepted by Communications of the ACM, the most impactful ACM publication (by IF metric). The peer review took 7 months, but it’s so rewarding to see comments & recognition from the anonymous reviewers! https://t.co/mvvKJTU8CM

— Shaolei Ren (@renshaolei) July 3, 2024

O'NEILL: And from what I understand, in the United States, at least, the water that's used in these AI data centers to do this cooling comes from local or municipal sources. What impact does an AI data center have on the local community around it?

REN: Well, yeah, it uses a lot of water from local municipal supplies. In the US, roughly 80 to 90% of the water consumed by data centers comes from potable water sources. There's not much information or official estimate, but we did a preliminary study, and it shows that in the US, data centers' water consumption is already roughly 2 to 3% of the water consumption of public water supplies. Here we're talking about consumption, not water withdrawal, so basically that's the water evaporated into the atmosphere. And based on the estimate by EPRI (the Electric Power Research Institute) of future AI energy demand, by 2030 this number could go up to 8%. So, I think that's really a lot.

O'NEILL: And there's an ongoing debate in Memphis, where tech billionaire Elon Musk is trying to build a massive AI supercomputer. The local utility estimates that this system is going to need something like a million gallons of water per day for cooling. From your perspective, how should local communities weigh the benefits against the costs of having these AI data centers nearby?

Computing infrastructure is a heavy consumer of water and power. Pictured above is a data center at Virginia Tech. (Photo: Christopher Bowns, Wikimedia Commons, CC BY-SA 2.0)

REN: I think, of course, there are benefits, especially in terms of economic development. For example, data center construction will bring some tax revenue, and after completion there will be a steady stream of tax dollars for the local government. But on the other hand, the natural resources, as you mentioned, a million gallons of water a day, could be an issue. Right now, I heard that the local water utility says the data center takes up only about 1% of the total water supply. But I would say they are probably comparing water consumption with water withdrawal. They supply water to residents and to other industries, but that usage is mostly withdrawal, because the water is returned to the system soon after use. However, when a data center takes the water, most of it is evaporated. So, I think 1% is not really the right metric; we need to use the same metric on both sides when we make the comparison. A 1% share of water withdrawal by data centers could mean that their share of water consumption is roughly 5 to 10%.
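Ren's point about mismatched metrics is easier to see with numbers. The sketch below uses his definition, consumption equals withdrawal minus discharge, and converts a user's share of withdrawal into its share of consumption. The consumptive fractions (80% for an evaporatively cooled data center, 10% for other utility customers) and the shower volumes are hypothetical values chosen for illustration, not measured figures for Memphis.

```python
def consumption(withdrawal: float, discharge: float) -> float:
    """Water consumed = water withdrawn minus water returned (discharged)."""
    return withdrawal - discharge


def consumption_share(withdrawal_share: float,
                      dc_consumptive_fraction: float,
                      other_consumptive_fraction: float) -> float:
    """Convert a data center's share of withdrawal into its share of consumption.

    Each consumptive fraction is consumption / withdrawal for that group of users.
    """
    dc = withdrawal_share * dc_consumptive_fraction
    others = (1.0 - withdrawal_share) * other_consumptive_fraction
    return dc / (dc + others)


if __name__ == "__main__":
    # Hypothetical shower: 60 liters withdrawn, 57 liters go down the drain.
    print(f"Shower consumption: {consumption(60, 57)} liters")

    # Hypothetical fractions: the data center evaporates 80% of what it
    # withdraws; other customers consume 10% and return the rest.
    share = consumption_share(0.01, 0.8, 0.1)
    print(f"1% of withdrawal -> {share:.1%} of consumption")
```

Under these assumed fractions, a 1% withdrawal share works out to roughly 7.5% of consumption, inside the 5 to 10% range Ren describes; other fractions would shift the result.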

O'NEILL: And now, Professor, the companies that create and operate these AI systems have their own interest in making the technology more efficient. So, what kind of improvements in AI technology could make it more energy- or water-efficient over time?

REN: Right, so they definitely have the incentive to reduce energy and resource consumption for training and inference. And we have actually seen a lot of research proposals and solutions that promise to reduce energy consumption, but it turns out that in reality the systems are not that optimized. I saw a paper from a top tech company's research team showing that energy consumption is about 10 times higher than we previously thought, even though they're using state-of-the-art optimization techniques. So, yes, they do have the incentive to reduce energy and resource usage for AI computing. However, the real world is a different story, partly because they have strict service level objectives to meet, which means they need to return responses to users in a short amount of time, and that limits how well they can optimize their systems. If they were just doing batch processing, they could be very energy efficient, but in reality there are a lot of constraints that prevent them from using those optimization techniques. So maybe we can compare a bus with a passenger car. In general, per passenger, a bus should be more energy efficient than a passenger car, assuming the bus is fully loaded. But in reality, because of random patterns in user requests and some other constraints, the bus is not fully loaded at all. If you have a 50-passenger bus that is usually loaded with just five passengers, the average fuel efficiency per passenger is much worse than a passenger car's.
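The bus analogy comes down to dividing a trip's energy by the number of passengers; in AI serving, a "passenger" corresponds roughly to a request processed in the same batch. The sketch below uses hypothetical energy values (a bus trip costing ten times a trip in a car with one occupant) purely to show how the load factor changes the per-passenger result.

```python
def energy_per_passenger(trip_energy: float, passengers: int) -> float:
    """Energy attributed to each passenger for one trip."""
    if passengers <= 0:
        raise ValueError("a trip needs at least one passenger")
    return trip_energy / passengers


# Hypothetical energy per trip, in arbitrary units.
CAR_TRIP_ENERGY = 1.0
BUS_TRIP_ENERGY = 10.0
BUS_CAPACITY = 50

car_alone = energy_per_passenger(CAR_TRIP_ENERGY, 1)
bus_full = energy_per_passenger(BUS_TRIP_ENERGY, BUS_CAPACITY)
bus_five = energy_per_passenger(BUS_TRIP_ENERGY, 5)

if __name__ == "__main__":
    print(f"Car, one passenger:  {car_alone:.2f}")
    print(f"Bus, 50 passengers:  {bus_full:.2f}")
    print(f"Bus, 5 passengers:   {bus_five:.2f}")
```

With these numbers, a full bus uses a fifth of the car's energy per passenger, while a bus carrying five passengers uses twice as much, mirroring Ren's point that latency-bound, lightly batched serving can erase the theoretical efficiency of large shared systems.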

Shaolei Ren is an Associate Professor of Electrical and Computer Engineering at the University of California, Riverside. (Photo: Courtesy of Shaolei Ren)

O'NEILL: AI has become a really immense part of many people's day-to-day lives. It's supposed to make our lives easier, but it comes at this tremendous cost to the environment. What's the solution here? If the technological advances aren't working out the way we're hoping they will, what's the fix?

REN: I think one potential fix is that instead of using larger and larger models, we could be using smaller models, because those smaller models are usually good enough to complete many of the tasks that we actually care about. For example, if you just want to know the weather or want a summary of some text, a smaller model is usually good enough. And using a smaller model means you're going to save a lot of resources and energy. Sometimes you could even run small models on your cell phone, and that can further save energy by, say, 80% very easily, compared to running a larger model in the cloud.
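Using the same rough rule Ren gave earlier in the interview, about two operations per parameter per token, one can compare the compute needed by a small and a large model. This is a sketch under stated assumptions: the 3-billion and 70-billion parameter sizes are hypothetical, and compute is only a proxy for energy, since hardware, memory traffic, and data center overhead also matter.

```python
def ops_per_token(num_params: float) -> float:
    """Approximate operations per generated token (about 2 per parameter)."""
    return 2 * num_params


def relative_compute(small_params: float, large_params: float) -> float:
    """Fraction of the large model's per-token compute used by the small model."""
    return ops_per_token(small_params) / ops_per_token(large_params)


if __name__ == "__main__":
    # Hypothetical model sizes, in parameters.
    small, large = 3e9, 70e9
    ratio = relative_compute(small, large)
    print(f"Small model needs {ratio:.1%} of the large model's compute per token")
```

Ren's 80% figure for running a small model on a phone is a separate, additional saving that this simple operation count does not capture.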

O'NEILL: Shaolei Ren is an Associate Professor of Electrical and Computer Engineering at the University of California, Riverside. Professor, thank you so much for taking the time with me today.

REN: Thanks for having me.

Links

The Washington Post | “The Hidden Environmental Cost of Using AI Chatbots”

Fortune | “AI Doesn’t Just Require Tons of Electric Power. It Also Guzzles Enormous Sums of Water.”

Bloomberg | “In Memphis, an AI Supercomputer from Elon Musk Stirs Hope and Concern”

The Atlantic | “AI is Taking Water from the Desert”

Yale Environment 360 | “As Use of A.I. Soars, So Does the Energy and Water It Requires”

Living on Earth wants to hear from you!

Living on Earth

62 Calef Highway, Suite 212

Lee, NH 03861

Telephone: 617-287-4121

E-mail: comments@loe.org

Donate to Living on Earth!

Living on Earth is an independent media program and relies entirely on contributions from listeners and institutions supporting public service. Please donate now to preserve an independent environmental voice.

Newsletter: Living on Earth offers a weekly delivery of the show's rundown to your mailbox. Sign up for our newsletter today!

Sailors For The Sea: Be the change you want to sea.

The Grantham Foundation for the Protection of the Environment: Committed to protecting and improving the health of the global environment.

Contribute to Living on Earth and receive, as our gift to you, an archival print of one of Mark Seth Lender's extraordinary wildlife photographs. Follow the link to see Mark's current collection of photographs.

Buy a signed copy of Mark Seth Lender's book Smeagull the Seagull & support Living on Earth
